- What is Robots.txt?

- Structure

- Here are some very classic and important commands from the robots.txt file:

- Sitemap and robots.txt

- Robots.txt file generator

- All explanations online

- Also note this very recent indication found on the Net:

What is Robots.txt?

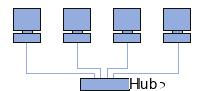

On your site, you generally want your pages to be indexed as thoroughly as possible by search engine spiders. Some pages, however, may be confidential or under construction, or you may simply not want them distributed widely through these engines. A site or a page under construction, for example, does not need to be crawled. You then need to prevent certain spiders from taking those pages into account.

This can be done using a text file called robots.txt, placed on your hosting at the root of your site. This file tells a search engine spider that wants to crawl your site what it may and may not do there. As soon as an engine's spider arrives on a site (for example https://monsite.info/), it looks for the document at the address https://monsite.info/robots.txt before fetching any other document. If the file exists, the spider reads it and follows the instructions it contains. If the file is not found, the spider starts reading and recording the page it came to visit, as well as the pages linked from it, on the assumption that nothing is forbidden.
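As a rough illustration of where a spider looks, the sketch below derives the robots.txt address from any page URL on the same host. The function name `robots_txt_url` and the page path `/dossier/page.html` are assumptions made up for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the robots.txt address for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


# Hypothetical page path, used only for illustration.
print(robots_txt_url("https://monsite.info/dossier/page.html"))
```

Whatever page the spider starts from, the file it consults always sits at the root of the host.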

Structure

There should be only one robots.txt file per site, and it must be located at the root of the site. The file name (robots.txt) must always be written in lowercase. The structure of a robots.txt file is as follows:

User-agent: *

Disallow: /cgi-bin/

Disallow: /tempo/

Disallow: /perso/

Disallow: /entravaux/

Disallow: /abonnes/prix.html

In this example:

- User-agent: * means that the rules that follow apply to all agents (all spiders), whoever they are.

- The robot will not explore the /cgi-bin/, /tempo/, /perso/ and /entravaux/ directories of the server, nor the /abonnes/prix.html file.
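To see how a robot might interpret this example, here is a minimal sketch using Python's standard `urllib.robotparser` module, with the paths written without spaces. The agent name `ExampleBot`, the helper `is_allowed` and the tested page paths are assumptions chosen for illustration, and real spiders may differ in their details.

```python
from urllib.robotparser import RobotFileParser

# The example file from the text, one directive per line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /tempo/
Disallow: /perso/
Disallow: /entravaux/
Disallow: /abonnes/prix.html
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(path, agent="ExampleBot"):
    """Return True if the agent may fetch https://monsite.info<path>."""
    return parser.can_fetch(agent, "https://monsite.info" + path)


# Hypothetical pages, used only to exercise the rules.
for path in ("/index.html", "/perso/photos.html",
             "/abonnes/prix.html", "/abonnes/index.html"):
    print(path, "allowed" if is_allowed(path) else "blocked")
```

Only the single file /abonnes/prix.html is excluded from the /abonnes/ directory; the rest of that directory stays open to crawling.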

The /tempo/ directory, for example, corresponds to the address https://monsite.info/. Each directory to be excluded from crawling must have its own Disallow: line. The Disallow: command indicates that "everything that begins with" the specified expression must not be crawled.

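So:

Disallow: /perso will block both https://monsite.info/ and https://monsite.info/ (any path that begins with /perso, whether it is a file or a directory).

Disallow: /perso/ will block https://monsite.info/ (anything inside the /perso/ directory) but will not apply to the address https://monsite.info/ (a path that begins with /perso without the trailing slash).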
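The "everything that begins with" rule amounts to a simple prefix test, which can be sketched as follows. The function `is_blocked` and the two paths `/perso.html` and `/perso/index.html` are hypothetical, invented only to show the difference the trailing slash makes.

```python
def is_blocked(path, disallow_value):
    """Return True when path starts with the Disallow value.

    An empty Disallow value blocks nothing, so it is handled separately.
    """
    return bool(disallow_value) and path.startswith(disallow_value)


# Hypothetical paths, chosen only for illustration.
paths = ["/perso.html", "/perso/index.html"]

for value in ("/perso", "/perso/"):
    for path in paths:
        print(f"Disallow: {value:<8} {path:<18} blocked={is_blocked(path, value)}")
```

With `/perso` both paths are blocked; with `/perso/` only the one inside the directory is.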

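On the other hand, a group of directives (a User-agent line and the Disallow lines that belong to it) must not contain blank lines: in the original standard, a blank line marks the end of a group.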

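In the original robots.txt standard, the star (*) is only accepted in the User-agent field.

It cannot be used as a wildcard (or as a truncation operator) as in the example: Disallow: /entravaux/*. Major engines such as Google and Bing, and the more recent RFC 9309, do accept * (and $ for the end of a URL) in paths, but not every robot understands them.

Likewise, the original standard has no permission field of the Allow: type. Google, Bing and RFC 9309 support it, but it is safer not to assume that every robot does.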

Finally, the field names (User-agent, Disallow) can be written in either lowercase or uppercase.

Anything to the right of a "#" sign on a line, including a whole line that begins with "#", is treated as a comment.
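The two conventions above (case-insensitive field names and "#" comments) can be sketched with a small line parser. The function `parse_line` is a hypothetical helper written for this illustration, not the code of any particular spider.

```python
def parse_line(line):
    """Split one robots.txt line into (field, value), or return None.

    The field name is lowercased because field names are case-insensitive;
    anything after a "#" is dropped because it is a comment.
    """
    content = line.split("#", 1)[0].strip()
    if ":" not in content:
        return None  # blank line, comment-only line, or malformed line
    field, value = content.split(":", 1)
    return field.strip().lower(), value.strip()


for raw in ("# private areas", "DISALLOW: /perso/  # personal pages", "user-agent: *"):
    print(repr(raw), "->", parse_line(raw))
```

Note that only the field name is normalized here; the path after the colon is kept exactly as written.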

Here are some very classic and important commands from the robots.txt file:

Disallow: / excludes every page on the server (no crawling possible).

Disallow: (with nothing after the colon) excludes no page at all (no constraint).

An empty or nonexistent robots.txt file has the same effect.

User-agent: googlebot identifies a particular robot (here, Google's).

User-agent: googlebot

Disallow:

User-agent: *

Disallow: /

These four lines allow the Google spider to crawl everything, but deny access to all other robots.
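The effect of this last combination can be checked with Python's standard `urllib.robotparser` module, as in the sketch below. The agent name `SomeOtherBot` and the page `page.html` are assumptions made up for the example.

```python
from urllib.robotparser import RobotFileParser

# The googlebot group allows everything; the "*" group blocks everything.
ROBOTS_TXT = """\
User-agent: googlebot
Disallow:
User-agent: *
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical page, used only for illustration.
URL = "https://monsite.info/page.html"

google_ok = parser.can_fetch("googlebot", URL)
other_ok = parser.can_fetch("SomeOtherBot", URL)
print("googlebot allowed:", google_ok)
print("SomeOtherBot allowed:", other_ok)
```

A robot follows the group that names it when there is one, and falls back to the `*` group otherwise.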

Sitemap and robots.txt

To help Google, Yahoo and other engines, especially those that offer no interface for submitting a site's sitemap file, you can declare the sitemap in the robots.txt file using the following syntax:

Sitemap: https://monsite.info/

(add one such line per file if there are several sitemap files ...)

for Google or Bing

or also:

Sitemap: https://monsite.info/

more specific to Yahoo ...
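For illustration, the sketch below reads Sitemap lines back out of a robots.txt file with Python's standard `urllib.robotparser` module (the `site_maps()` method requires Python 3.8 or later). The file name `sitemap.xml` is an assumption made for this example, not the address given in the original article.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt; the sitemap file name is assumed for the example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /perso/
Sitemap: https://monsite.info/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns None when the file declares no sitemap.
sitemaps = parser.site_maps() or []
for url in sitemaps:
    print("Declared sitemap:", url)
```

The Sitemap line is independent of the User-agent groups, so it applies whichever robot reads the file.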

Robots.txt file generator

If you want to create a robots.txt file quickly and easily, and be sure that it is valid, you can also use a robots.txt generator, such as this one: robots.txt file generator

All explanations online

The reference site

or, for more basic definitions:

in French on Wikipedia

Also note this very recent indication found on the Net:

A user discovered that Google honored a directive named "noindex" when it was placed in a site's "robots.txt" file, for example:

User-agent: Googlebot

Disallow: /perso/

Disallow: /entravaux/

Noindex: /clients/

While the "Disallow" directive tells robots to ignore the contents of a directory (no crawling, no link following), "Noindex" would only keep the pages out of the index while still letting the robot follow the links they contain. In a way, it is the equivalent of a "Robots" meta tag containing "Noindex, Follow". Google reportedly indicated that this directive was being tested, that only Google supported it, and that nothing guaranteed it would be adopted in the end. That caution proved justified: Google announced in 2019 that it would stop honoring "Noindex" in robots.txt as of September 1, 2019, so the directive should no longer be relied on.

Note: the best solution for this "clients" directory remains to block it via a '.htaccess' file, which will apply to all engines ... ;)